This series takes a deeper look at how our engineers build digital financial services. Learn more about how they address large-scale technical challenges at Tala.

By: Bibin Sebastian, Engineering Manager

Have you ever wondered how successful digital businesses understand their customers' needs, why their website is designed a particular way, or how they make quick data-driven decisions?

A/B testing is one of the most popular and effective techniques for understanding your customers at scale, giving you the insights you need to provide them with the best experience and value.

What Is A/B Testing?

An A/B test is easiest to explain with an example.

Imagine that your team owns the homepage of an e-commerce website. Arguably, it is the most important page on the site, since almost all users land on it first. The homepage has a search widget that needs a new UI design to increase the number of searches on the site. You come up with a new design based on a hypothesis. Now, how do you know whether your hypothesis is correct and the new design will produce the expected results?

That’s where A/B tests come in: you can use an A/B test to try the new UI design on real users and see which version performs better.

An A/B test compares two versions: the control, which is the existing version, and the variant, which is the new version you want to test. In this case, the control is the existing search widget (A) and the variant is the new search widget design (B).

To attribute any difference in results to a single change, an A/B test varies only one variable. A multivariate test extends this idea: it tests several variables at once by creating a variant for each combination of their values.
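A multivariate test usually crosses every value of each variable. The number of variants is therefore the product of the number of values per variable. The Python sketch below enumerates those combinations. The factor names and values are hypothetical illustrations for the search widget, not taken from a real test.

```python
from itertools import product

# Hypothetical variables (factors) for the search widget and the values to try.
factors = {
    "button_color": ["blue", "green"],
    "placeholder_text": ["Search products", "What are you looking for?"],
}


def multivariate_variants(factors: dict[str, list[str]]) -> list[dict[str, str]]:
    """Return one variant per combination of factor values (the Cartesian product)."""
    names = list(factors)
    return [dict(zip(names, values)) for values in product(*factors.values())]


variants = multivariate_variants(factors)
print(len(variants))  # 2 values x 2 values = 4 variants
for variant in variants:
    print(variant)
```

Each extra variable multiplies the number of variants, and every variant needs its own share of traffic. As a result, multivariate tests generally need more users or more time than a simple A/B test.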

You then show each version of the search widget to a separate cohort of users. For instance, 50% of users get the existing version (A), and the remaining 50% get the new design (B). To identify the winner, measure and compare the number of searches made with each version.

The beauty of A/B testing is that it gets you fast feedback on your hypothesis directly from your customers, removing much of the guesswork from validating product and design choices. Because the approach is data-driven, the impact of a change is easy to measure and analyze.
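Tools differ in how they assign users to cohorts. One common approach hashes a stable user identifier into one of 100 buckets and sends buckets below the variant percentage to the new design. The Python sketch below shows this idea; it is not how any particular tool works. The experiment name and user IDs are hypothetical.

```python
import hashlib


def assign_version(experiment_id: str, user_id: str, variant_percent: int = 50) -> str:
    """Deterministically assign a user to "control" (A) or "variant" (B)."""
    # Hash the experiment and user IDs together so each user gets a stable
    # bucket in [0, 100), and different experiments bucket users differently.
    key = f"{experiment_id}:{user_id}".encode("utf-8")
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big") % 100
    return "variant" if bucket < variant_percent else "control"


# Simulate 10,000 hypothetical users to check that the split is close to 50:50.
counts = {"control": 0, "variant": 0}
for i in range(10_000):
    counts[assign_version("search-widget-redesign", f"user-{i}")] += 1
print(counts)
```

The bucket depends only on the IDs, so a returning user keeps seeing the same version even though the assignment is never stored. This keeps the experience consistent and the cohorts clean.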

How Do You Design A/B Tests?

A systematic approach to A/B testing helps you get reliable results, and designing tests that produce useful outcomes takes a fair amount of preparation and groundwork. At a high level, A/B testing has three main stages.

1) Researching and Prioritizing the Hypothesis to be Tested

You typically plan an A/B test when you want to improve something in your business. Start by researching your business use cases, collecting and analyzing data points, and drawing up a list of hypotheses to test that align with your business goals. Then prioritize the list and work through it step by step, testing one or a few hypotheses at a time.

You also need to identify one or more metrics to measure the test against. In the search widget example above, the metric is the “number of searches” made from the widget.
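Cohorts rarely end up exactly the same size, so comparing raw totals can mislead; normalizing the metric per user avoids that. The minimal Python sketch below computes searches per user for each version from a hypothetical event log. The tuple layout and event names are assumptions, and the sketch assumes every exposed user appears in the log at least once.

```python
from collections import Counter, defaultdict

# Hypothetical event log: (user_id, version, event_name) collected during the test.
events = [
    ("u1", "control", "search"),
    ("u1", "control", "search"),
    ("u2", "control", "page_view"),
    ("u3", "variant", "search"),
    ("u4", "variant", "search"),
    ("u4", "variant", "search"),
]


def searches_per_user(events: list[tuple[str, str, str]]) -> dict[str, float]:
    """Average number of search events per user, for each version."""
    users = defaultdict(set)   # version -> distinct users seen in that version
    searches = Counter()       # version -> total search events
    for user_id, version, event_name in events:
        users[version].add(user_id)
        if event_name == "search":
            searches[version] += 1
    return {version: searches[version] / len(ids) for version, ids in users.items()}


print(searches_per_user(events))  # {'control': 1.0, 'variant': 1.5}
```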

2) Executing the Tests

For each hypothesis, identify the control and variant versions. Then decide on the percentage split, that is, what percentage of users should see the control versus the variant. Configure that split and deploy the test. The duration of the test is critical, because you need enough data to measure the test reliably against your metrics. The duration depends on the number of active users, the number of variants under test, and the percentage of users in each variant.
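As a rough worked example of how those inputs combine, the sketch below first estimates a sample size, then divides it by each variant's daily traffic. It uses the standard normal-approximation sample-size formula for comparing two conversion rates. That formula is chosen here for illustration; the article does not specify one. The baseline rate, expected lift, and traffic figures are hypothetical. The sketch also assumes that the given number of new, distinct users enters the test each day.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, expected: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Users needed in each version to detect a change from `baseline` to
    `expected` conversion with a two-sided test (normal approximation):
    n = (z_{1-alpha/2} + z_{power})^2 * (p1(1-p1) + p2(1-p2)) / (p1 - p2)^2
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = baseline * (1 - baseline) + expected * (1 - expected)
    return math.ceil((z_alpha + z_power) ** 2 * variance / (expected - baseline) ** 2)


def estimate_duration_days(n_per_variant: int, daily_new_users: int,
                           shares: list[float]) -> int:
    """Days until the version with the smallest traffic share reaches n_per_variant users."""
    return math.ceil(n_per_variant / (daily_new_users * min(shares)))


n = sample_size_per_variant(baseline=0.10, expected=0.12)
print(n)  # roughly 3,800 users per version
print(estimate_duration_days(n, daily_new_users=2_000, shares=[0.5, 0.5]))  # 4 days
print(estimate_duration_days(n, daily_new_users=2_000, shares=[1/3, 1/3, 1/3]))  # 6 days
```

The three-version test takes longer because each version receives a smaller share of the same traffic. This is why the number of variants appears in the duration estimate.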

3) Measuring the Test Results and Deploying the Winner

Once you have enough data points, compare the metrics across versions to see whether one clearly outperforms the others, and adopt the winner. If you are not satisfied with the results, use what you learned to formulate further A/B tests.
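Deciding whether a version "stands out" is usually done with a statistical significance test rather than by eyeballing the numbers. For a yes/no metric, such as whether a user searched at least once, a two-proportion z-test is one standard choice; the Python sketch below implements it. The counts and the 0.05 significance threshold are hypothetical inputs. A count metric such as searches per user would typically use a different test, such as a t-test on per-user values.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(successes_a: int, users_a: int,
                          successes_b: int, users_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: versions A and B have the same rate."""
    rate_a, rate_b = successes_a / users_a, successes_b / users_b
    pooled = (successes_a + successes_b) / (users_a + users_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / users_a + 1 / users_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: users who searched at least once, out of users in each version.
z, p_value = two_proportion_z_test(successes_a=400, users_a=4_000,
                                   successes_b=480, users_b=4_000)
if p_value >= 0.05:
    winner = "no clear winner"
else:
    winner = "variant (B)" if z > 0 else "control (A)"
print(f"z={z:.2f}, p={p_value:.4f}, winner: {winner}")
```

Fix the sample size before the test starts, and evaluate it once that size is reached. Stopping as soon as the p-value first dips below the threshold inflates the rate of false positives.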

Engineering Considerations for A/B Testing

If you plan to adopt A/B testing, there are two important factors to consider from an engineering standpoint:

1) Tooling

A good A/B testing tool is crucial for effective results. You can build a tool in-house or use one available on the market. Either way, make sure it has the following characteristics.

- Ability to configure tests: You should be able to configure the control and variant versions, the input parameters the split is based on, and the split percentage itself.

- Ability to deploy tests quickly: Once you configure a test in the tool, you’ll want it deployed for customers to use. The tool should therefore integrate easily with your systems so that tests reach production environments quickly.

- Ability to measure test results: The tool should capture the metrics and provide options for comparing the results.

- Ability to analyze results: You should be able to analyze past test results for continued learning.
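The configuration capabilities listed above could be captured in a data structure like the one in this minimal Python sketch. The class and field names are hypothetical, not any particular tool's schema. The sketch records the versions and their split percentages, the input parameter the split is based on, and the metrics to measure. It also rejects splits that add up to more than 100%.

```python
from dataclasses import dataclass, field


@dataclass
class ExperimentConfig:
    """Hypothetical A/B test configuration."""
    name: str
    splits: dict[str, int]            # version name -> percentage of users
    split_key: str = "user_id"        # input parameter the split is based on
    metrics: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        if "control" not in self.splits:
            raise ValueError("a test needs a control version")
        total = sum(self.splits.values())
        # Any percentage left below 100 is simply not enrolled in the test.
        if not 0 < total <= 100:
            raise ValueError(f"split percentages must total 1-100, got {total}")


search_test = ExperimentConfig(
    name="search-widget-redesign",
    splits={"control": 50, "variant": 50},
    metrics=["searches_per_user"],
)
print(search_test)
```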

2) Architecture Support for A/B Testing

To deploy A/B tests successfully in your organization, your software system architecture should have the necessary hooks built in. This is an architectural choice every organization has to make. A/B testing can be done in the application’s front end or in the back end. If you want to run front-end A/B tests, make sure the system that renders your front end is integrated with your testing tool. The same applies to back-end tests and the back-end systems that serve them.

It is also good practice to identify which parts of the application are testable and which are not. To return to the e-commerce example, the homepage receives the most visitors, which makes it the most effective page for experimentation.

How Do You Implement A/B Tests in Your Code?

For the purposes of this explanation, let’s assume that your A/B testing tool provides a library or API for integration. In simple terms, your code will look something like this:

```python
def get_treatment(user_id: str) -> str:
    # Fetch this user's treatment ("on" or "off") from the A/B testing tool.
    # Placeholder: replace with the call your tool's library or API provides;
    # the user_id parameter is an assumption, so check your tool's API.
    return "off"


treatment = get_treatment("user-123")

if treatment == "on":
    pass  # display variant version
else:
    pass  # display control version
```

If you have configured a 50:50 split between the control and variant in your tool, get_treatment() will return "on" for only 50% of your users. So 50% of your users will see the variant version, and the rest will see the control version.

A/B Testing at Tala

At Tala, we care deeply about providing the best experience to our users. We run tests on the Tala Android mobile app to understand user behavior, build the right product, and provide the best value to our customers.

Here are two major A/B tests we deployed in 2022. Following the practices we’ve outlined, our team developed these tests after thorough research and analysis of our business metrics, followed by brainstorming and prioritization. The goal was to create the most value for our customers.

Installment MVP

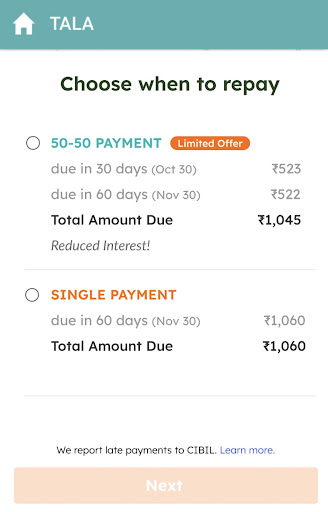

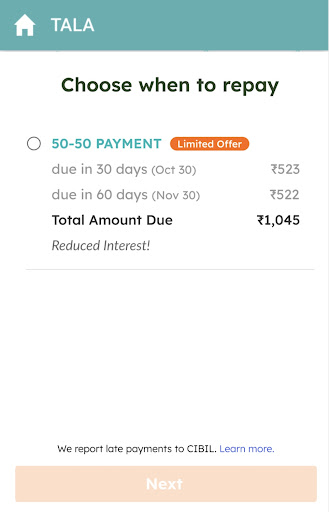

Tala’s Installment MVP feature was a test to understand how our customers in India would adopt installment products. Our existing loan offering had only a one-time repayment option (the control version). We wanted to see how customers would respond to repaying in multiple installments, and whether this flexibility would help them repay their loans on time.

We introduced two variants: variant 1, with both installment and single-payment options, and variant 2, with only the installment option. Variant 1 was designed to show which option customers would choose when given a choice. Variant 2 was designed to show whether customers would proceed when offered only installments. We deployed the experiment with a 33% (control) : 33% (variant 1) : 33% (variant 2) split.
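A split like this can be implemented by giving each version a contiguous range of buckets. The Python sketch below is a generic illustration, not a description of how Tala's tooling works. The experiment ID, user IDs, and version names are hypothetical. With 33:33:33, buckets 0 to 98 map to the three versions. The one remaining bucket (1% of users) is left out of the experiment.

```python
import hashlib
from collections import Counter
from typing import Optional

# Hypothetical versions and percentages mirroring a 33:33:33 split.
VERSIONS = [("control", 33), ("variant_1", 33), ("variant_2", 33)]


def choose_version(experiment_id: str, user_id: str,
                   versions: list[tuple[str, int]] = VERSIONS) -> Optional[str]:
    """Map the user to a stable bucket in [0, 100) and return the version owning it."""
    key = f"{experiment_id}:{user_id}".encode("utf-8")
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big") % 100
    upper = 0
    for name, percent in versions:
        upper += percent  # this version owns buckets [upper - percent, upper)
        if bucket < upper:
            return name
    return None  # leftover buckets: the user is not in the experiment


counts = Counter(choose_version("installment-mvp", f"user-{i}") for i in range(30_000))
print(counts)
```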

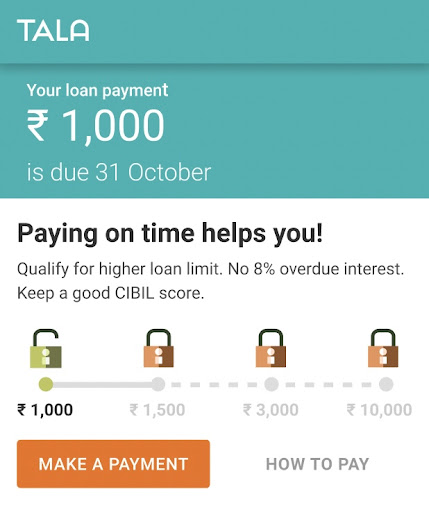

Laddering UI

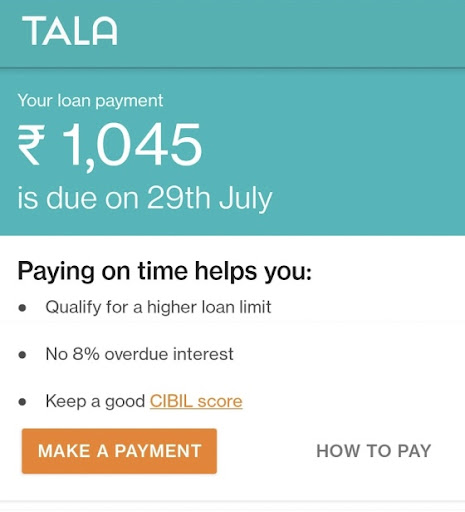

For this A/B test, we wanted to find the best way to tell customers that paying on time makes them eligible for higher loan amounts. Our hypothesis was that if customers knew paying on time could unlock higher-value loans, letting them ladder up over time, they would be motivated to repay their loans early. The team ran a user study, talked to customers, and worked through multiple UI designs to settle on the best way to message customers:

- Control (with text under “Paying on time helps you” section)

- Variant (with slightly modified text and image under “Paying on time helps you” section)

A/B testing is a key part of Tala’s experimentation practice. We continue to conduct small and large tests to improve and optimize our app so that it serves our customers better every day. If you find this interesting and would like to partner and collaborate with us, we are always looking for curious and passionate minds to join us. We are hiring!