This article was originally published on Medium by Elliot Masor, Lead SDET at Tala.

At Tala, we encountered an interesting challenge in developing and testing microservices. With so many services integrating together, how can we validate the expected behavior of each service in a controlled environment? The scenarios and user journeys are far too complex to validate from a frontend mobile application. Also, while unit tests are essential, they don’t fully validate integration with other services. We needed to create a layer of testing that could run alongside unit testing in our continuous integration pipelines.

This led to the idea of service level integration testing, a layer of testing where each service is tested by itself in isolation. The service in isolation strategy is adapted from unit testing, where each class is tested while its dependencies are mocked: here, each service is tested while its external service dependencies are mocked. I developed Fake Dependency Service to serve as Tala’s core mock service for all external dependencies. When a service is tested in isolation, the service under test thinks it’s communicating with the real external dependency, but it’s actually calling Fake Dependency Service, which returns mocked test data.

Challenges with End to End Testing

There are various challenges when integration testing is only done from the frontend, either through a UI or by hitting frontend RESTful API endpoints directly. This is also known as end to end testing. These challenges include:

- The business logic of each microservice cannot be easily tested from the frontend. There may be scenarios that rely on various data combinations that would be too challenging to orchestrate. This may involve highly complex database seeding.

- Tests that exercise tens, or even hundreds, of microservices integrating together take a long time to run.

- When these tests fail, identifying which service failed and why can be challenging, especially without proper tracing.

- A bug in one service can cause tests to fail for another service, which can block a release for a service that doesn’t have any bugs.

- Bugs are caught only after new features are merged to the development environment, instead of before.

- These tests tend to be flakier than service level integration tests because a bug in one service can break tests for another service.

While end to end testing has its challenges, end to end tests are important for overall user journey validation. They should be reserved for key scenarios and overall service integration sanity checks, not used to test all acceptance criteria of all services.

Solution: Fake Dependency Service

We needed a way to cover all acceptance criteria without writing them as automated end to end tests, so that we could focus on writing automated tests for each microservice in isolation. Enter: Fake Dependency Service. When a service is tested in isolation, all of its dependent services are mocked. This allows us to focus on the business logic of the service instead of the whole user journey. So, instead of seeding data into the dependent services’ databases and calling their real endpoints, we mock or “fake” their endpoints to return the data we set up. These dependent services can be internal services or third-party services. Some benefits of writing automated tests for services in isolation include:

- Bugs are caught when pull requests (PRs) are opened, so they don’t get merged into the development environment. This gives us fast feedback, and the bugs can be fixed right on the branch that introduced them.

- Tests run faster than end to end tests, which makes large data driven test sets feasible.

- Tests are less flaky than end to end tests because they don’t depend on other services being functional. A bug in another service won’t matter because that service is mocked. Similar to unit testing, we test on the assumption that dependencies are working.

- We can start writing tests before dependent services are completed. Given that the design documents are completed and the API contracts are finalized for the dependent services, we can start writing our integration tests for the service being developed.

- Edge cases can be easily tested. We can test what would happen if a dependent service returns 500s or bad responses by mocking the response. In an end to end environment, that is not easily controllable.

- Test failures are simple to debug because we know which service under test is failing. There are lots of troubleshooting tools at our disposal, such as checking HTTP proxy captures (e.g. Charles Proxy), querying the database, checking the service logs, and reading the test failure message.

- Increasing test coverage becomes easier because mocking the external services gives us more control over their behavior.

- Performance testing can be done on services in isolation. This allows us to gather metrics and find bottlenecks on each service rather than the end to end user journey.

While the external API services are mocked, we should still integrate with some services, including SQL databases, Redis, and Kafka. Integrating with these instead of mocking them lets us ensure those integrations have no bugs: we verify that queries work correctly and that event streaming is functional. We can even still integrate with AWS services. LocalStack is a great tool for testing AWS integration without hitting real AWS, which saves on cost.

I wrote Fake Dependency Service in Spring Boot with Kotlin, but its usage is platform agnostic because its interface is through API calls. It’s also dockerized. The language of the service we are testing also does not matter. As long as the dependent service is RESTful with JSON bodies or SOAP with XML bodies, it can be mocked by Fake Dependency Service. Additionally, the language/framework of our automated tests can be any flavor. We can use anything including XUnit, Kotest, MochaJs, ScalaTest, Spock Framework, and more.

Figure 1 shows how, instead of making API calls to various other services, the Service Under Test only calls Fake Dependency Service. The automated specs use HTTP clients to send all HTTP requests, and those requests only call two services: Fake Dependency Service and the Service Under Test. Fake Dependency Service allows us to set up data to mock and to verify requests sent to it. The Service Under Test calls Fake Dependency Service and gets back the data mocked during setup. Configuring a service to call a mock instead of the real endpoint should be as easy as setting it in the configuration for the environment under test.
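All of the setup requests captured later in this article share one shape. Each is a POST to /mock-service/<service>/mock-resources/<resource path>, with a body that wraps the mocked responseBody together with responseSetUpMetadata (httpStatus and delayMs). The minimal sketch below assumes that shape holds for every mocked endpoint. It builds a setup request for either a normal response or an edge case such as a 500 or a slow dependency. The helper name mock_setup_request is hypothetical, not part of Fake Dependency Service. It only builds the path and body, which you would then send with whatever HTTP client your specs use.

```python
from __future__ import annotations

import json
from typing import Any


def mock_setup_request(
    service: str,
    resource_path: str,
    response_body: Any,
    http_status: int = 200,
    delay_ms: int = 0,
) -> tuple[str, str]:
    """Build the (path, JSON body) of a Fake Dependency Service setup call.

    The path and body layout mirror the captured setup requests; this helper
    is a hypothetical convenience for specs, not part of the service itself.
    """
    path = f"/mock-service/{service}/mock-resources/{resource_path.lstrip('/')}"
    body = json.dumps(
        {
            "responseBody": response_body,
            # delayMs presumably delays the mocked response (assumption).
            "responseSetUpMetadata": {"httpStatus": http_status, "delayMs": delay_ms},
        },
        separators=(",", ":"),  # compact JSON, matching the captured bodies
    )
    return path, body


# Happy path: the Pharmacy Service callback mock from the captured run.
callback_path, callback_body = mock_setup_request(
    "pharmacy-service",
    "immunizations/decisions?sourceRefId=eaffd7cd-b933-455c-b4fe-25ee976bb367",
    {},
)

# Edge case: User Service answers with a 500 after a 2 second delay.
error_path, error_body = mock_setup_request(
    "user-service", "users/6265893322863879296", {}, http_status=500, delay_ms=2000
)
```

An edge case is then just a different httpStatus or delayMs on the same setup call. This is what makes 500s and slow dependencies cheap to cover compared with an end to end environment.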

Fake Dependency & Continuous Integration

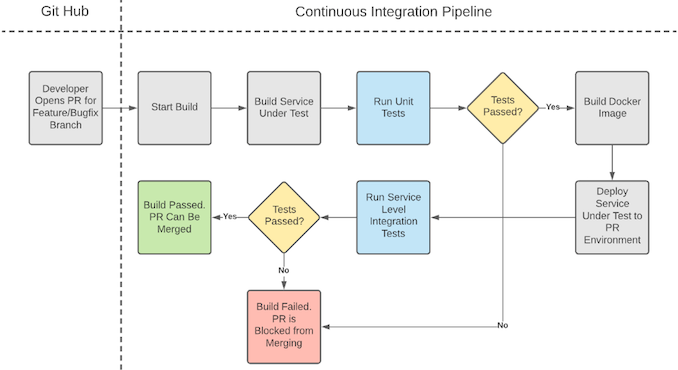

Fake Dependency Service is dockerized and provides an opportunity for additional layers of testing within a Continuous Integration framework. Similar to unit tests, writing tests at the service level catches bugs before they are released to the development environment. By adding the service level integration tests to the continuous integration pipeline, PRs introducing bugs can be blocked, preventing bugs from being merged.

Let’s say we have an integration environment called PR Environment, where every microservice is configured to point to Fake Dependency Service instead of to each other. In Dev, QA, Stage, and Prod, the services all integrate together. In the PR Environment, however, they point to Fake Dependency Service so that every external service is mocked.
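One way to make this a pure configuration concern is to resolve every dependency’s base URL from the environment name, as in the minimal sketch below. Several names here are hypothetical: the environment name pr, the Fake Dependency host http://fake-dependency:8080, and the real host names. The sketch also assumes that Fake Dependency Service serves the mocked endpoints under /mock-service/<service-name>. The captures in this article only show its /mock-resources setup and verification paths, so check the repository’s README for the actual routing.

```python
from __future__ import annotations

# Dependencies of the example Immunization Decider Service.
DEPENDENCIES = ("user-service", "immunization-history-service", "pharmacy-service")

# Hypothetical host name for the dockerized Fake Dependency Service.
FAKE_DEPENDENCY_URL = "http://fake-dependency:8080"


def dependency_base_urls(environment: str) -> dict[str, str]:
    """Map each dependency to the base URL the service under test should call."""
    if environment == "pr":
        # PR Environment: every dependency is served by Fake Dependency Service
        # (the /mock-service/<name> prefix is an assumption).
        return {name: f"{FAKE_DEPENDENCY_URL}/mock-service/{name}" for name in DEPENDENCIES}
    if environment in ("dev", "qa", "stage", "prod"):
        # End to end environments: services call each other (hypothetical hosts).
        return {name: f"https://{name}.{environment}.example.internal" for name in DEPENDENCIES}
    raise ValueError(f"unknown environment: {environment}")
```

The service’s HTTP clients would be built from these base URLs, with the environment name supplied by the deployment (for example, through an environment variable). The business logic stays identical across environments, and only the configuration decides whether a dependency is real or faked.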

In our Continuous Integration (see Figure 2), when a PR is opened, that branch gets deployed to the PR Environment and all of the service level integration tests run there. If all unit and integration tests pass, then the PR can be merged. If either fails, then the PR is blocked from merging.

End to End vs Service in Isolation Environments

In end to end environments, all of the services integrate together. Production is an end to end environment, which is hit by real customers. Dev, QA, and Stage are end to end testing environments. They are not hit by real customers. There we test the highest priority user journeys. It is not feasible to test all acceptance criteria for all services.

In the Service in Isolation environment, all services integrate with Fake Dependency Service and never with each other. Testing all acceptance criteria is feasible because we have fine control of dependent service responses by mocking them. These tests run in the PR environment when developers open PRs to merge features and bug fixes. For example, if a PR is open in the repository of Service A, only the tests for Service A will run. Service A will call Fake Dependency Service any time it makes an external API request.

Example: Immunization Decider Service

I wrote an example microservice called Immunization Decider Service. Its business domain is deciding which immunizations a user is eligible to receive. Immunization Decider Service does not store any data about the user or their immunization history in its database, so it requests that information from User Service and Immunization History Service. Based on the user’s age and prior immunization history, it decides which immunizations are available: the eligibility rules factor in the user’s age and how long ago the user received each immunization. The immunizations this service knows about are COVID19, INFLUENZA, and TDAP.

Immunization Decider Service depends on three other services:

- Pharmacy Service

- User Service

- Immunization History Service

In order to test Immunization Decider Service, we need to mock all three dependent services using Fake Dependency Service.

Here is the flow:

- Pharmacy Service requests Immunization Decider Service to make a decision for a user. Immunization Decider Service returns a 202 immediately, since the operation is async.

- Immunization Decider Service requests the user’s profile from User Service. The profile contains the user’s date of birth, from which their age is derived.

- Immunization Decider Service requests the user’s immunization history from Immunization History Service, which contains all prior immunization administrations with timestamps.

- Immunization Decider Service makes a decision, records the result in its database, and sends a callback to Pharmacy Service with the result.
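The four steps above can be sketched as an async handler. This is not the repository’s Kotlin implementation. It is a minimal Python sketch in which the HTTP clients, the database, and the eligibility rules (which the article does not spell out) are replaced by injected callables and a dict, all with hypothetical names. The handler records the decision as IN_PROGRESS and returns 202 right away. It then finishes the decision and the Pharmacy Service callback on a background executor. The FAILED status name and sending a callback on failure are assumptions.

```python
from __future__ import annotations

from concurrent.futures import Executor, ThreadPoolExecutor
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable


@dataclass
class Dependencies:
    """Injected stand-ins (hypothetical) for the HTTP clients and the rules."""

    get_user: Callable[[int], dict]  # User Service: profile with dateOfBirth
    get_history: Callable[[int], dict]  # Immunization History Service
    send_callback: Callable[[dict], None]  # Pharmacy Service callback
    decide: Callable[[dict, dict], list]  # eligibility rules, not given in the article


def _now() -> str:
    return datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")


def initiate_decision(source_ref_id: str, user_id: int, deps: Dependencies,
                      store: dict, executor: Executor) -> int:
    """Record the decision as IN_PROGRESS, finish it in the background, return 202."""
    store[source_ref_id] = {
        "sourceRefId": source_ref_id, "userId": user_id, "status": "IN_PROGRESS",
        "availableImmunizations": [], "startedAt": _now(), "finishedAt": None, "error": None,
    }
    executor.submit(_run_decision, source_ref_id, user_id, deps, store)
    return 202


def _run_decision(source_ref_id: str, user_id: int, deps: Dependencies, store: dict) -> None:
    decision = store[source_ref_id]  # the dict stands in for the database
    try:
        user = deps.get_user(user_id)
        history = deps.get_history(user_id)
        decision["availableImmunizations"] = deps.decide(user, history)
        decision["status"] = "SUCCESS"
    except Exception as exc:  # e.g. a mocked dependency answering with a 500
        decision["status"] = "FAILED"  # hypothetical status name
        decision["error"] = str(exc)
    decision["finishedAt"] = _now()
    deps.send_callback(dict(decision))  # callback to Pharmacy Service with the result


# Usage with stubs; leaving the with-block waits for the background work.
store = {}
callbacks = []
stub_deps = Dependencies(
    get_user=lambda user_id: {"userId": user_id},
    get_history=lambda user_id: {"occurrences": []},
    send_callback=callbacks.append,
    decide=lambda user, history: ["INFLUENZA"],  # placeholder, not the real rules
)
with ThreadPoolExecutor(max_workers=1) as executor:
    status_code = initiate_decision("eaffd7cd-b933-455c-b4fe-25ee976bb367",
                                    6265893322863879296, stub_deps, store, executor)
```

Because every dependency is injected, the handler is easy to reason about in isolation. In the PR Environment, the same role is played by HTTP clients whose base URLs point at Fake Dependency Service.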

Here’s an example run of the automated spec InitiateDecisionSpec, with all HTTP traffic captured by Charles Proxy. The captures are stripped down for compactness.

1. Mock User Service is set up to return a user whose age is 13 years.

POST /mock-service/user-service/mock-resources/users/6265893322863879296 HTTP/1.1

X-Request-ID: 9ca4ad86-d61e-4bf0-9b01-326b8a36c413

{"responseBody":{"userId":6265893322863879296,"dateOfBirth":"2009-01-18T19:14:49.716669Z","firstName":"8c8e9ed4e9434ab79fbc024b35e79b52","lastName":"0cff59d482324ec0babe42ebc9939a2b"},"responseSetUpMetadata":{"httpStatus":200,"delayMs":0}}

HTTP/1.1 200

2. Mock Immunization History Service is set up to return two immunizations: INFLUENZA 500 days ago and TDAP 1000 days ago.

POST /mock-service/immunization-history-service/mock-resources/immunizations?userId=6265893322863879296 HTTP/1.1

X-Request-ID: 9ca4ad86-d61e-4bf0-9b01-326b8a36c413

{"responseBody":{"occurrences":[{"date":"2020-09-02T19:14:49.419481Z","type":"INFLUENZA"},{"date":"2019-04-21T19:14:49.421627Z","type":"TDAP"}]},"responseSetUpMetadata":{"httpStatus":200,"delayMs":0}}

HTTP/1.1 200

3. Mock Pharmacy Service is set up for its callback endpoint.

POST /mock-service/pharmacy-service/mock-resources/immunizations/decisions?sourceRefId=eaffd7cd-b933-455c-b4fe-25ee976bb367 HTTP/1.1

X-Request-ID: 9ca4ad86-d61e-4bf0-9b01-326b8a36c413

{"responseBody":{},"responseSetUpMetadata":{"httpStatus":200,"delayMs":0}}

HTTP/1.1 200

4. Call Immunization Decider Service to initiate a decision for the user under test.

POST /immunization-decider/decisions HTTP/1.1

X-Request-ID: 9ca4ad86-d61e-4bf0-9b01-326b8a36c413

{"sourceRefId":"eaffd7cd-b933-455c-b4fe-25ee976bb367","userId":"6265893322863879296"}

HTTP/1.1 202

5. Call Immunization Decider Service to get the decision. Since the decision is async, we have to retry calling this endpoint until the status is no longer IN_PROGRESS.

GET /immunization-decider/decisions?sourceRefId=eaffd7cd-b933-455c-b4fe-25ee976bb367 HTTP/1.1

X-Request-ID: 9ca4ad86-d61e-4bf0-9b01-326b8a36c413

HTTP/1.1 200

{"sourceRefId":"eaffd7cd-b933-455c-b4fe-25ee976bb367","userId":6265893322863879296,"status":"IN_PROGRESS","availableImmunizations":[],"startedAt":"2022-01-15T19:14:50Z","finishedAt":null,"error":null}

GET /immunization-decider/decisions?sourceRefId=eaffd7cd-b933-455c-b4fe-25ee976bb367 HTTP/1.1

X-Request-ID: 9ca4ad86-d61e-4bf0-9b01-326b8a36c413

HTTP/1.1 200

{"sourceRefId":"eaffd7cd-b933-455c-b4fe-25ee976bb367","userId":6265893322863879296,"status":"SUCCESS","availableImmunizations":["INFLUENZA"],"startedAt":"2022-01-15T19:14:50Z","finishedAt":"2022-01-15T19:14:51Z","error":null}

6. Verify the callback made to Mock Pharmacy Service.

GET /mock-service/pharmacy-service/mock-resources/immunizations/decisions?sourceRefId=eaffd7cd-b933-455c-b4fe-25ee976bb367 HTTP/1.1

X-Request-ID: 9ca4ad86-d61e-4bf0-9b01-326b8a36c413

HTTP/1.1 200

[{"sourceRefId":"eaffd7cd-b933-455c-b4fe-25ee976bb367","userId":6265893322863879296,"status":"SUCCESS","availableImmunizations":["INFLUENZA"],"startedAt":"2022-01-15T19:14:50.000000Z","finishedAt":"2022-01-15T19:14:51.000000Z"}]

The content from steps 5 and 6 should be equivalent. Both contain status and availableImmunizations, which are expected to be SUCCESS and ["INFLUENZA"] respectively.
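Steps 5 and 6 can be expressed as two small helpers. “Equivalent” cannot mean byte-for-byte equal here. In the captures, the callback’s timestamps carry microseconds (19:14:50.000000Z versus 19:14:50Z), and the callback omits the null error field. The minimal sketch below, with hypothetical helper names, first polls a fetch function until the status leaves IN_PROGRESS. It then compares the decision with the single recorded callback after dropping null fields and parsing the two timestamps. The attempt count and interval are arbitrary defaults, not values from the article.

```python
from __future__ import annotations

import time
from datetime import datetime
from typing import Callable

TIMESTAMP_FIELDS = ("startedAt", "finishedAt")


def poll_decision(fetch: Callable[[], dict], attempts: int = 10, interval_s: float = 0.5) -> dict:
    """Call fetch() (the GET in step 5) until the status is no longer IN_PROGRESS."""
    for attempt in range(attempts):
        decision = fetch()
        if decision["status"] != "IN_PROGRESS":
            return decision
        if attempt < attempts - 1:
            time.sleep(interval_s)
    raise TimeoutError(f"decision still IN_PROGRESS after {attempts} attempts")


def _normalize(body: dict) -> dict:
    """Drop null fields and parse timestamps so 19:14:50Z equals 19:14:50.000000Z."""
    normalized = {}
    for key, value in body.items():
        if value is None:
            continue
        if key in TIMESTAMP_FIELDS:
            value = datetime.fromisoformat(value.replace("Z", "+00:00"))
        normalized[key] = value
    return normalized


def assert_callback_matches(decision: dict, callbacks: list[dict]) -> None:
    """Step 6: exactly one callback was recorded, and it matches the decision."""
    assert len(callbacks) == 1, f"expected 1 callback, got {len(callbacks)}"
    assert _normalize(decision) == _normalize(callbacks[0])
```

In a spec, fetch would wrap the GET from step 5, and callbacks would be the parsed body of the verification GET from step 6. After the equivalence check, the spec asserts status and availableImmunizations against the expected values.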

Data driven testing is an efficient way to cover all acceptance criteria with reusable code in a readable format (see Figure 6). The two leftmost columns represent the conditional scenarios that alter how the test is seeded. The rightmost column represents the expected behavior, which changes based on the seeded data.
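The seeding side of such a table can be sketched as follows. Each row is assumed to hold the user’s age in years, the prior immunizations as (type, days ago) pairs, and the expected availableImmunizations. Only one row is filled in: the captured run. Further rows would come from the service’s acceptance criteria, which the article does not list. Dates are computed relative to a fixed now, and a year is treated as 365 days. That assumption reproduces the captured dateOfBirth of 2009-01-18 for a run on 2022-01-15. All function names are hypothetical.

```python
from __future__ import annotations

from datetime import datetime, timedelta
from typing import Callable

# Each row: (age in years, prior immunizations as (type, days ago), expected result).
CASES = [
    (13, [("INFLUENZA", 500), ("TDAP", 1000)], ["INFLUENZA"]),  # the captured run
]


def iso_z(moment: datetime) -> str:
    """Format a UTC datetime like the captures: microseconds plus a trailing Z."""
    return moment.strftime("%Y-%m-%dT%H:%M:%S.%fZ")


def seed_for_case(user_id: int, age_years: int, prior: list[tuple[str, int]],
                  now: datetime) -> dict:
    """Build the responseBody values for the User and Immunization History mocks."""
    # firstName/lastName from the captured user body are omitted for brevity.
    user = {"userId": user_id, "dateOfBirth": iso_z(now - timedelta(days=365 * age_years))}
    history = {
        "occurrences": [
            {"date": iso_z(now - timedelta(days=days_ago)), "type": kind}
            for kind, days_ago in prior
        ]
    }
    return {"user": user, "history": history}


def run_cases(execute: Callable[[dict], list], user_id: int, now: datetime) -> list[str]:
    """Seed and execute every row; return a description of each mismatch.

    execute is a hypothetical callable that posts both mocks, initiates the
    decision, polls it, and returns its availableImmunizations.
    """
    failures = []
    for age_years, prior, expected in CASES:
        actual = execute(seed_for_case(user_id, age_years, prior, now))
        if actual != expected:
            failures.append(f"age={age_years} prior={prior}: expected {expected}, got {actual}")
    return failures
```

Collecting every mismatch instead of stopping at the first keeps a failing run readable. Adding a scenario then means adding one row to CASES.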

Use Fake Dependency Service for Your Own Testing

Fake Dependency Service is available on GitHub. It is free and open source under the MIT License. The repository serves as a strategic guide to designing automation specs that use Fake Dependency Service as a mock service. It contains:

- The Fake Dependency Service.

- The Immunization Decider Service, which is an example implementation of a service under test.

- An example HTTP client library.

- An example suite of automation specs for the microservice, using the HTTP client library to send all HTTP requests.

The README is an extensive guide reviewing the following:

- Features of Fake Dependency Service.

- Features of the Immunization Decider Service.

- How to run the services locally in Docker.

- How to run the automated specs locally.

- Design patterns for HTTP clients, both for testing and for use in backend services.

- Design patterns for automation specs.

Final Thoughts

In a microservice architecture, multiple services integrate together to execute the workflows of user journeys. These services are separated by concern instead of clumped into a single large monolith. This separation makes it possible to test each service in isolation, instead of only testing from the frontend user perspective.

From a unit testing perspective, well-designed applications separate each class by responsibility and use dependency injection. By doing so, all dependencies are mockable, allowing for near 100% code coverage in unit tests. Likewise, microservices separated by responsibility allow for integration testing at the service level. Just as Mockito, MOQ, and MockK provide mocking features at the class level for unit tests, Fake Dependency Service provides mocking features at the service level for integration tests.